Since the arrival of ChatGPT, if not before, AI has been on everyone's lips. What was still science fiction a few years ago has become reality today. AI is making its way into almost all areas of life and shaping many aspects of our everyday lives. As an IT company, we have been working on this topic since 2015 and use machine learning and artificial neural networks (ANN) in our projects. In addition, we have built successful AI models for different areas of application as part of our research activities with the universities in the region.

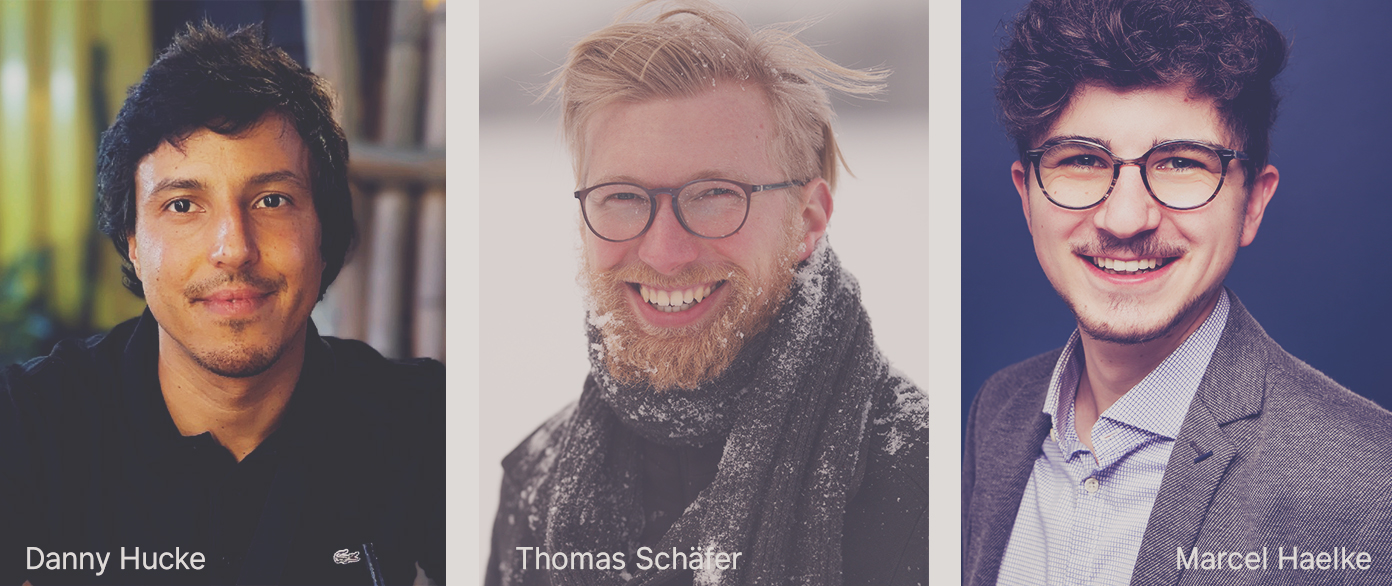

On the occasion of Artificial Intelligence Appreciation Day, we have now spoken to three of our employees. In the interview, they share their experiences and forecasts with us and give interesting insights into their work. Among other things, they talk about open source models, economic aspects and effects on areas within the IT industry.

We'll start with Danny Hucke, who is team leader for digital product design and a scientific advisor. Danny has been working intensively with AI for some time, and we asked him for his assessment of the current situation.

I see the ability to scale up very quickly as one of the main reasons for the rapid success of recent years: more data, more parameters, more computing power, more memory. Even today, this is still associated with very high costs. The current AI revolution did not come about through fundamentally new theories or algorithms, because these have essentially existed for decades. Computing power and scaling are clearly the decisive factors here.

Nevertheless, it is my experience that AI has not yet fully arrived in technical work. Of course, more and more developers here are using tools like ChatGPT for their research or when an SQL query is needed, for example. A handful of employees also regularly use GitHub Copilot - but it is not yet the majority. However, anyone who wants to do software development competitively in the next few years will not be able to do without AI systems - and that doesn't just apply to software development.

When you say not only for software development, which sectors do you have in mind specifically?

The first thing that comes to mind is the creative industry. It surprised me quite a bit, and probably many others as well, that the first jobs to be significantly affected are not assembly line jobs, but jobs that actually require an enormous amount of human creativity.

Basically, however, I see AI quite clearly as a time saver and enabler. The barrier to entry for previously rather complicated topics has been lowered significantly. The machine speaks not only my language, but also complex languages such as programming languages. The system is just as smart as before, but now I can tell it what to do.

These tools give quick access to all kinds of topics and offer a variety of ways to act on them. For example, if you want to explore a new framework, simply ask ChatGPT. The fear of the blank page has become obsolete, and overcoming the first hurdle has never been easier. Especially in the medical field, we are faced with possibilities that could well be described as revolutionary - Google is currently at the forefront of this. But even in everyday office life, many things will change; Microsoft, for example, is currently equipping its entire Office suite with AI functions.

As far as software development is concerned, based on the current state of development, I don't see AI completely replacing us humans. It can mainly do things for us that don't require a lot of thinking, such as writing boilerplate code. More complex questions will most likely not be something we can hand over to AI systems in the next one to two years. However, straightforward tasks such as building simple data APIs or creating less complex websites are already handled excellently by AI models today.

These tools will also be used much more in private life, taking care of everything from travel bookings to food orders. The Plus version of ChatGPT already has a variety of plug-ins and connections, something that will increase significantly in the future.

These are actually quite good prospects. Do you also see risks in connection with AI that could come our way?

Of course, despite the advantages, there are also dangers - and you don't even need dystopias in which machines take over the world. The disinformation campaigns of recent years are a good example. With AI, it is now possible to scale these campaigns to a previously unimaginable size - and with machines that write on a human level. What used to require entire troll armies can now be done by small groups with AI programmes. With comparatively little effort, it is also possible to generate voices that can hardly be distinguished from those of real people.

Development is not standing still and there are improvements every day. AI is already being used for various forms of scam, phishing and disinformation, and I believe that we are only at the beginning here, before things get really dicey. However, it should also be emphasised that these tools can be used for the opposite purpose as well, e.g. to protect against such attacks.

To what extent this has to do with the intelligence of these systems, or whether these systems are intelligent at all, is debatable. What they can do in any case is make plans. Everyone who has asked ChatGPT whether it can generate a step-by-step guide to a certain problem knows this - no matter how good the answer is. If the systems are given the opportunity to execute their plans in reality, e.g. by accessing other programmes, the internet, APIs, etc., a lot of damage can be done with the wrong intention.

In my view, however, we are still a long way from Artificial General Intelligence (AGI). This is the form of AI that, as an autonomous system, combines the cognitive abilities of humans with the computing power of computers. It should be noted that no one can explain how consciousness arises and which "algorithms" in our brain really lead to our cognitive abilities. Perhaps current models are not that far off. But I believe, without being able to prove it, that there are still important ingredients missing before machines really exceed human thinking abilities. I come from a mathematical background and I don't see in my conversations with ChatGPT, for example, that these models are close to finding solutions and proofs for complex mathematical problems as we humans can.

As I said, we are not yet at a point where AI behaves like a human brain, but the very possibility of this development holds dangers. No one can predict the future, but it is clear that the next few years will bring great changes and I see AI as an opportunity to enrich and improve our lives - both in our private lives and at work. The important thing here is not to blindly trust the systems and to check every solution. No matter whether it's code or financial tips: Never adopt something you don't understand.

Next we spoke to Thomas Schäfer, who works for us as a software engineer.

I had my first contact with AI during my studies, when I wrote my master's thesis on artificial intelligence. I enjoyed the topic a lot because there are so many areas of application in which AI can support us humans - and I say support quite deliberately. At the moment, I don't think AI can replace humans on a large scale at the same level of quality. In the area of pre-filtering, however, I see a very successful use case for AI support. This is exactly what my master's thesis was about. An AI model was trained to recognise hate speech on the internet and to pre-sort comments. This saves time and enables the moderators to work more efficiently.

How do you assess the current development?

Admittedly, I am quite amazed at how quickly progress has been made in the last five years - especially that open source models are increasingly able to match the success of the large commercial models. Basically, I'm not a fan of sharing company internals with an AI that you don't host yourself. The case of Samsung and ChatGPT comes to mind. These tools can help the software development process enormously, of course, but at what cost? Often, the data entered is used to train precisely this code interpreter. Awareness needs to be created here.

Basically, there is also the question of whether one wants to use open source models, which are getting better and better but still lag behind applications such as ChatGPT or GitHub Copilot. Do you want to use state-of-the-art technologies with all the associated advantages and disadvantages, or wait a little longer until open source models have caught up?

An interesting comparison. What experience have you gained with such tools?

As a software developer, you always want to be up to date. I also use tools such as ChatGPT to explore how the whole thing works. At the moment, however, I only work with it in a private environment, and even there only with fictitious data. Abstracting the data so that it can no longer be traced back to its source would cost me more time in my work context than working out a solution myself.

Basically, I find all these applications incredibly exciting because you can do so much with them. However, I find it problematic that efforts are being made to merely trick an AI and that valuable (computing) time is being sacrificed for this purpose instead of using the AI productively.

AI tools will become increasingly important and will take over large parts of our work in software development - this transition needs to be planned for. Especially with huge amounts of data that humans could no longer process on their own, the use of AI offers considerable advantages in aggregating the data and drawing conclusions from it.

Recently, many articles have been circulating in the media about the dangers of AI - including doomsday scenarios. What is your assessment of this as someone who also deals with it professionally?

Basically, an AI only does exactly what it is taught - a neural network does not create new knowledge. Personally, I also find it difficult to use the word "learned" in this context. We are anthropomorphising something that is not human and is also fundamentally different in the way it works from what we understand as human learning. As far as our jobs as software developers are concerned, I don't see any risk from the use of AI, nor do I see any dystopias in which an AI takes over the world. Nevertheless, the dangers are real.

Much of what we do is already determined by AI. For example, the order of the posts displayed in social networks is determined by an AI - and that is actually problematic. People present themselves as successful on social media, and such posts get a lot of clicks. This ensures that you are increasingly shown such posts. Every day we encounter a flood of successful and happy people on these platforms and our brains don't manage to switch off - we start comparing ourselves to utopias. We should not take social media as a model, but rather see it as a cautionary tale in AI ethics. I myself only use Mastodon now, and rarely LinkedIn - I am always looking for ethical solutions.

Another problem I see is the flood of so-called fake sites created with AI tools by entire content farms. These sites deliver 100% AI-generated content, often serve niches, and are stuffed with affiliate links that are more about making a quick buck than offering actual help. These are things that are real and we need to be aware of them. Overall, though, these are all fairly manageable dangers that we can comprehend with our human brains. Of course, it always depends on how these things are used, but overall, the opportunities outweigh the threats here and we should take advantage of them.

As far as our work in software development is concerned, AI tools will take over large parts sooner rather than later. Even today, we already use code scanners to increase code quality. This convenience also allows us to work on more complex and important issues, which is why software development will continue to be in human hands. These tools support our work and do not replace us - they will remain or become an integral part of the future company toolbox.

Let's now turn to Marcel Haelke, who heads our Data Engineering Team. We wanted to know from him what opportunities, but also dangers, he sees for data science as AI development progresses.

In general, I see the achievements in the AI field as a promising opportunity for data science. The further development of AI - especially of the underlying machine learning algorithms - is in itself pure data science. However, I don't expect a rapid replacement here, with data scientists soon disappearing. Rather, we are gaining a powerful tool in this area. At the moment, for example, providing and cleansing test data still takes considerable manual effort. But with the increasing intelligence of AI systems, these tasks could of course be outsourced in the future.

The advances in the AI field fundamentally open up a multitude of new possibilities. Machines have the advantage that they have a neutral point of view and can therefore recognise more patterns as well as detect deviations from them. Their view is not limited to what is known to humans. In this respect, one could well venture the thesis that machines extend data science instead of replacing it. Whether it is business decisions or troubleshooting in various systems, AI support can bring significant progress here. Debugging will be simplified, and systems that can independently search for sources of error will most likely be developed as well. In the long term, nothing will remain the same in the field of data science either. Many of the algorithms we work with today aim to feed precisely such intelligent systems.

Nevertheless, I don't think that developers will be made redundant overnight. But AI can of course help speed up processes. These tools can already take over the writing of simple code or automatically generate and check classes, for example. Of course, this does not yet completely replace humans in software development, but one must also pay attention to how the competition uses these tools. Products can be offered with less work and possibly at a lower price. Companies have to keep up with this and also train their own developers to use these tools correctly. Of course, many things are still unclear here: which licences and copyright rules apply, and what happens to the generated code? Above all, it is important to be transparent with one's own customers.

As far as jobs are concerned, I don't see jobs being eliminated because of AI any time soon. At the moment, we are still struggling with the shortage of skilled workers, and I think it is very unlikely that this situation will reverse in a short period of time so that we will have to let skilled workers go. That does not mean that I rule out such a development in the long term. Overall, the whole issue cuts both ways. On the one hand, of course, there is the potential that at some point fewer developers will be needed because much can simply be outsourced to or generated by AI systems. Fewer people will also be needed for the remaining work, that is, operating these tools and checking their results. At the same time, however, it opens up completely new project possibilities, and this relief enables people to take on and implement more projects. So you are able to run many more projects in parallel, which of course requires more people.

An exciting approach. Apart from economic aspects, where do you see difficulties in dealing with AI that affect us as a society?

I see a bit of a danger in relying too much on the fact that you get a ready-made solution from these tools. Especially with things that you didn't write yourself, it's even more important to take a closer look to make sure that functionalities and security standards are implemented the way you want them to be.

This huge amount of data on the internet, which is used to train these algorithms, can of course also pose a risk. Among other things, there is an extremely large amount of false data and misinformation in circulation that could be spread in an even more targeted manner in the future. It is important to keep your eyes open here. How does the algorithm learn? Where does it get its information for queries? Can it be negatively influenced?

As a sustainability officer in the company, I naturally also think about the enormous amounts of computing power that these applications consume. This has an impact on our environment too. Sure, AI tools can make a lot of things easier, but at what cost? How many emissions are generated, for example, when you let ChatGPT plan your holiday trip? I think that much more awareness needs to be created in this regard, not only in the IT industry, but also in private use. The more people use these tools, the more computing power is needed, and that generates additional emissions. Depending on where these systems are located, security standards also play a major role and these systems can become both a weapon and a target.

Another risk I see is that it becomes harder to tell AI-generated content apart from human content. What is real and what is not? It's easy to be fooled here. Fake news has been a topic almost everywhere in recent years, and I see problematic developments here that could be intensified by AI. But perhaps the systems will also help us to recognise this content.

Last but not least, what do you think about robots taking over the world in the future?

I think that's just media hype at the moment. That doesn't absolve you, when developing these systems, from always asking whether what you're working on can still be controlled and whether you understand how it works. Here I see a clear responsibility for developers. It is important to make use of these possibilities and to use them for the benefit of humanity.

We would like to thank our employees for these enlightening insights and can only echo that we see AI as a great opportunity to positively change our world. We are currently looking for new solutions that will benefit both our customers and our employees. Already today, our expertise in AI and machine learning gives us a big head start. Together with our customers, we are creating a smart future - through software development.